I am a Research Scientist at Google DeepMind, based in NYC.

I received my Ph.D. in Computer Science from Cornell University, where I was advised by Claire Cardie, with additional mentorship from Thorsten Joachims, Kianté Brantley, Daniel D. Lee, and many other amazing collaborators. During my Ph.D., I also interned at Microsoft Research, working with Dipendra Misra. Prior to Cornell, I worked with Luke Zettlemoyer and Yejin Choi at the University of Washington.

[Google Scholar] [Semantic Scholar] [GitHub] [Twitter]

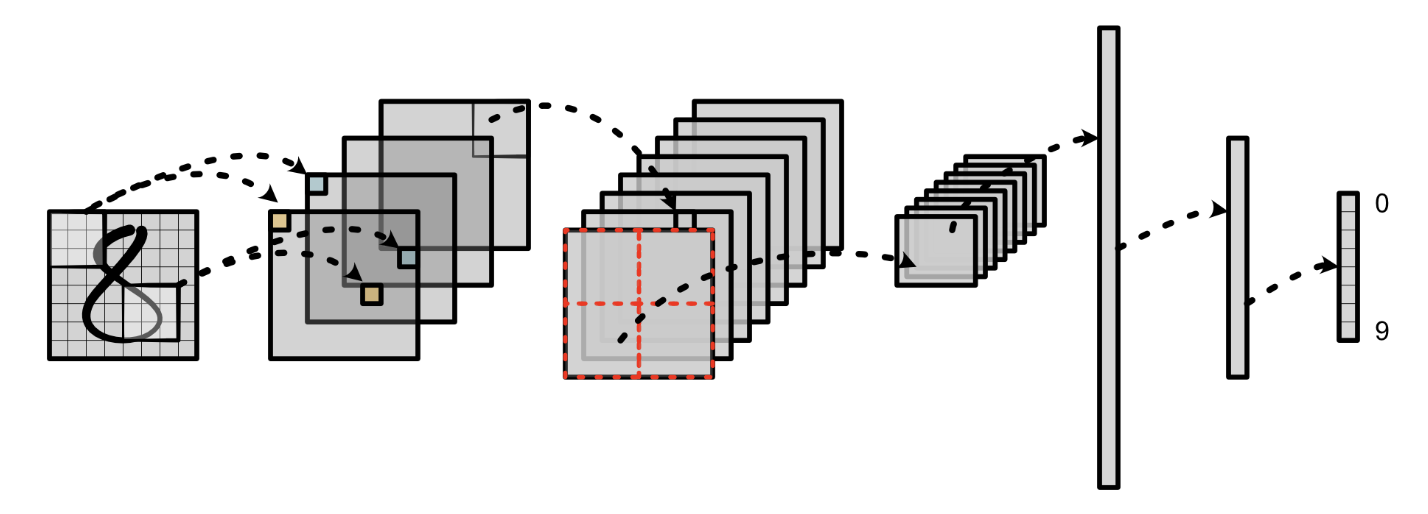

Check out our MiniTorch project, a Python re-implementation of the Torch API. It is a teaching library designed to teach machine learning engineers 1) core deep learning concepts such as tensor operations, autograd, and CUDA programming, and 2) how to engineer machine learning systems that are correct, robust, and fast. We built this DIY deep learning library primarily for the Machine Learning Engineering course at Cornell Tech.

@01/2025 Talk at Microsoft.

@12/2024 Poster presentation at NeurIPS 2024 in Vancouver.

@12/2024 Talk at Berkeley. [slides]

@11/2024 Talk at Meta.

@11/2024 Talk at Google.

@10/2024 Poster presentation at the 15th Annual Machine Learning Symposium in NYC.

@10/2024 Attending COLM 2024 in Philadelphia.

@10/2024 Talk at NYU.

@09/2024 Talk at Princeton University.

@09/2024 Talk at Columbia University. Recording is available online.

Research

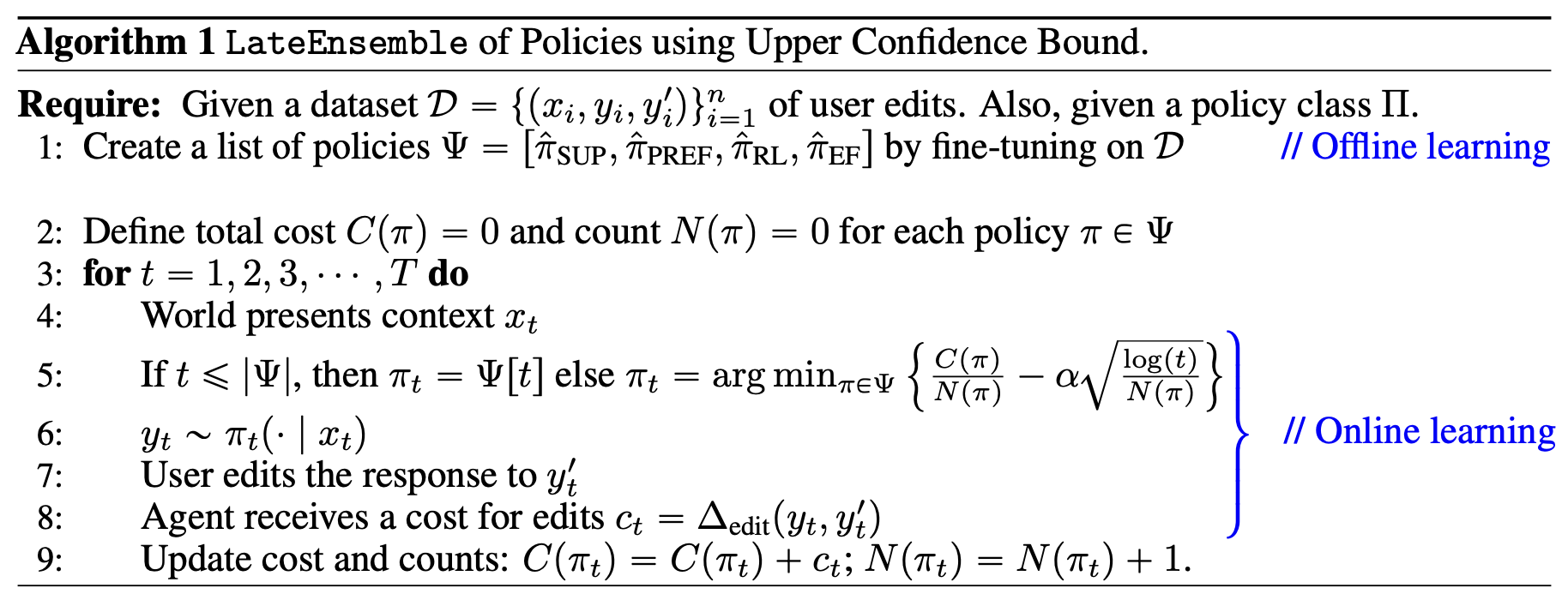

Principled Fine-tuning of LLMs from User-Edits: A Medley of Preference, Supervision, and Reward

Dipendra Misra*, Aldo Pacchiano*, Ta-Chung Chi, and Ge Gao

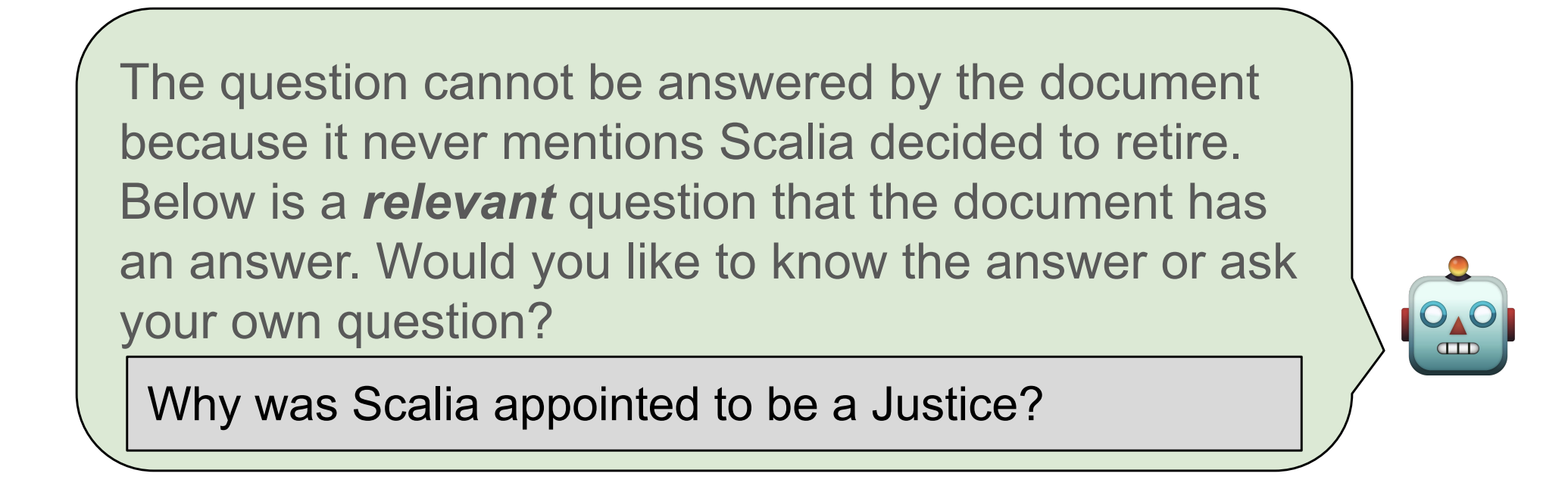

I Could’ve Asked That: Reformulating Unanswerable Questions

Wenting Zhao, Ge Gao, Claire Cardie, and Sasha Rush

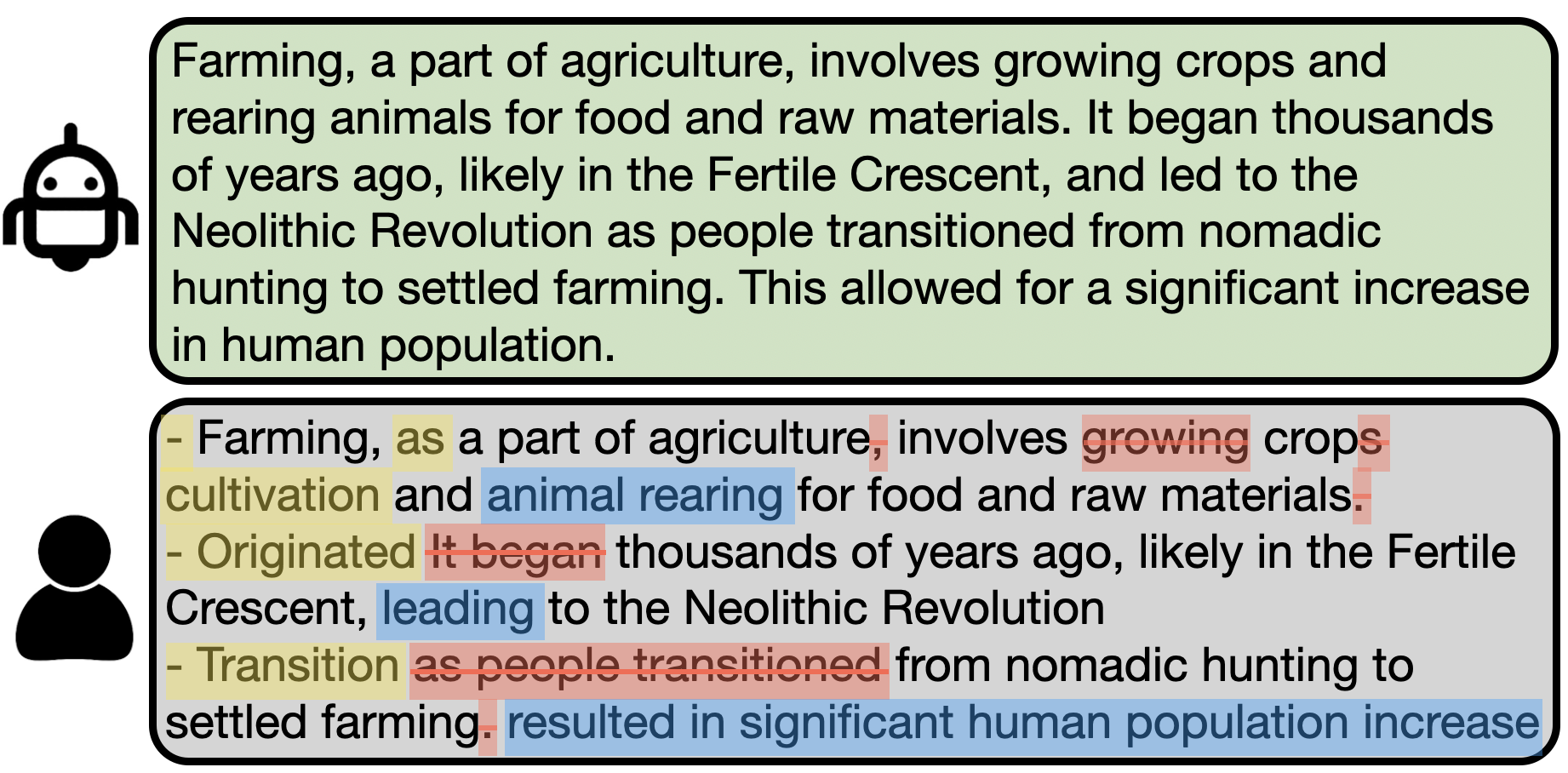

Aligning LLM Agents by Learning Latent Preference from User Edits

Ge Gao*, Alexey Taymanov*, Eduardo Salinas, Paul Mineiro, and Dipendra Misra

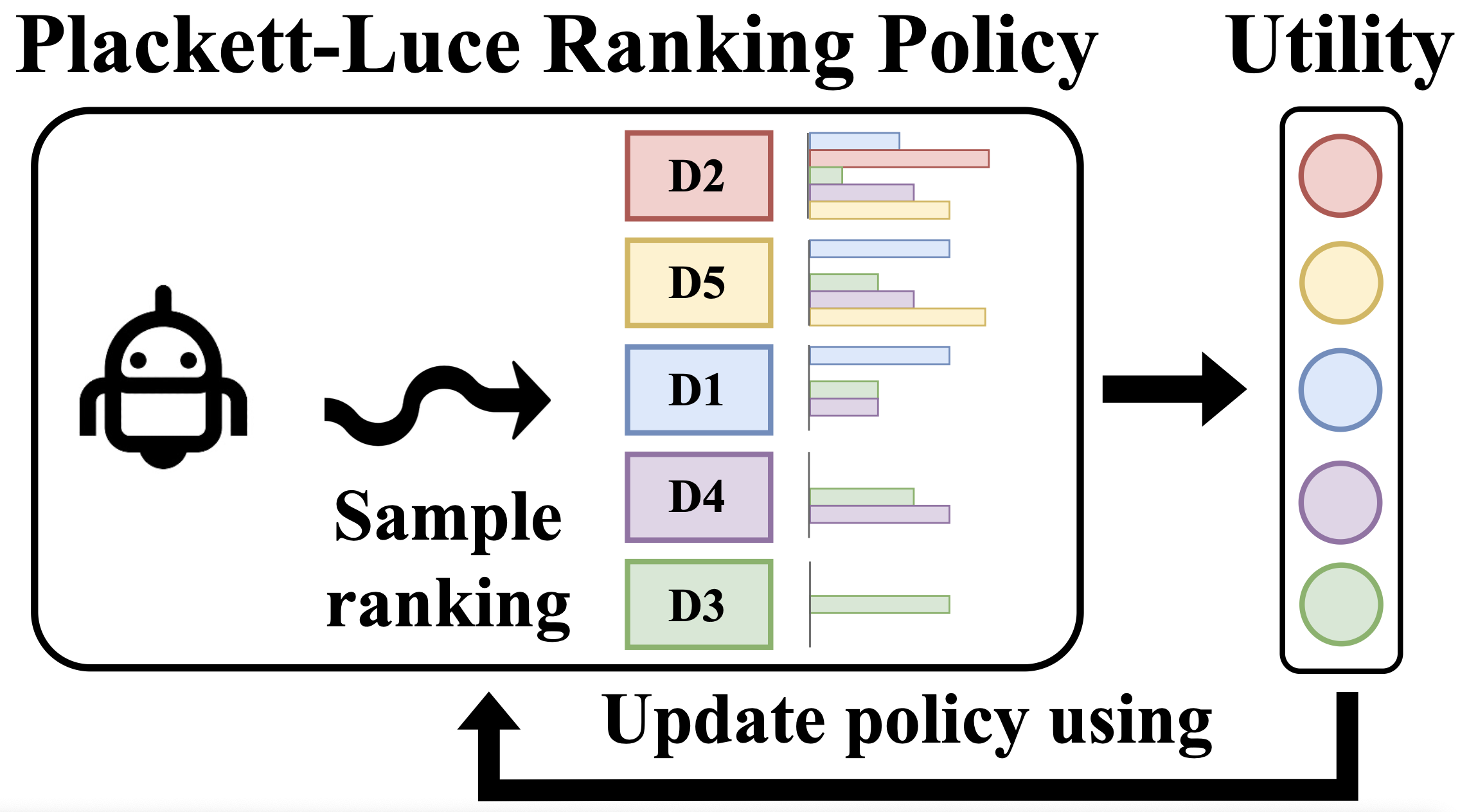

Policy-Gradient Training of Language Models for Ranking

Ge Gao, Jonathan D. Chang, Claire Cardie, Kianté Brantley, and Thorsten Joachims

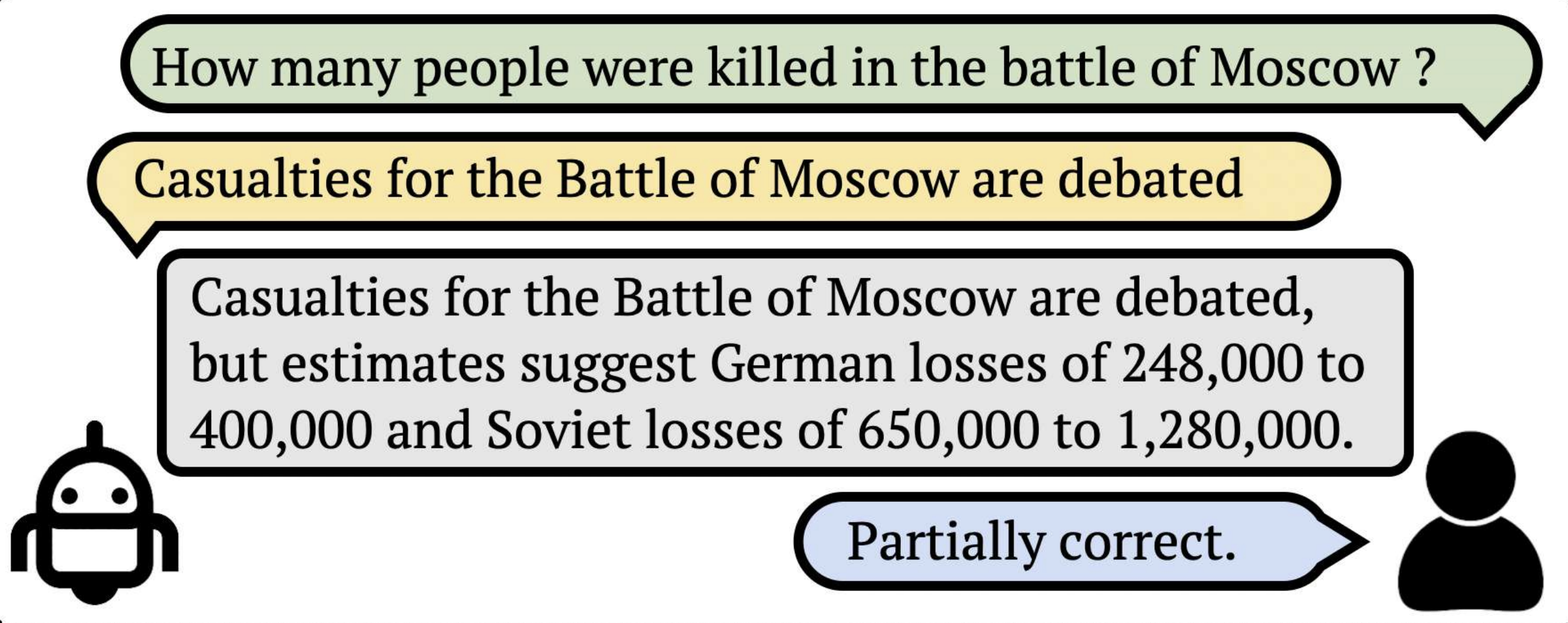

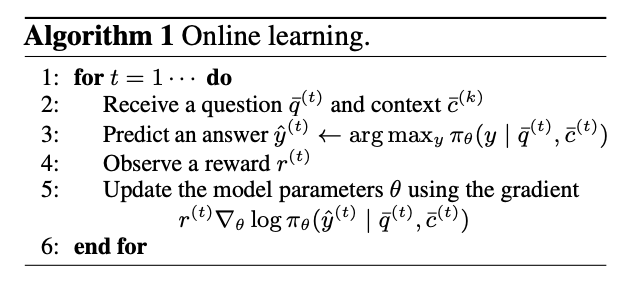

Continually Improving Extractive QA via Human Feedback

Ge Gao*, Hung-Ting Chen*, Yoav Artzi, and Eunsol Choi

Simulating Bandit Learning from User Feedback for Extractive QA

Ge Gao, Eunsol Choi, and Yoav Artzi

MiniTorch: Teaching Library for Python Re-implementation of the Torch API

Sasha Rush, Ge Gao, Anton Abilov, and Aaron Gokaslan

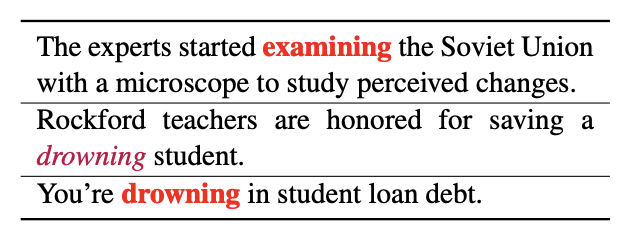

Neural Metaphor Detection in Context

Ge Gao, Eunsol Choi, Yejin Choi, and Luke Zettlemoyer

* denotes equal first authorship.

Contact

ggao asperand cs dot cornell dot edu

Misc

- You can pronounce my first name as /gə/ or like the letter G.

- If you are interested, you can read more about my full name in classical Chinese literature.